Social media: How can governments regulate it?

The government is considering ways to regulate social media companies, including Facebook, YouTube and Instagram, over harmful content.

The renewed focus comes after links were made between the suicide of teenager Molly Russell and her exposure to images of self-harm on Instagram.

The photo and video-sharing social network site has said it is making further changes to its approach and won’t allow any graphic images of self-harm.

Self-governance

At the moment, when it comes to graphic content, social media largely relies on self-governance. Sites such as YouTube and Facebook have their own rules about what’s unacceptable (video, pictures or text) and the way that users are expected to behave towards one another.

These rules cover content that promotes fake news, hate speech or extremism, or that causes mental health problems.

If the rules are broken, it is up to social media firms to remove the offending material.

YouTube has defended its record on removing inappropriate content. In a recent blog post it said that, while it faced an “immense challenge”, it had a “core responsibility” to remove content that violates its terms and conditions.

The video-sharing site said that 7.8m videos were taken down between July and September 2018, with 81% of them automatically removed by machines, and three-quarters of those clips never receiving a single view.

Globally, YouTube employs 10,000 people in monitoring and removing content, as well as policy development.

Facebook, which owns Instagram, told the BBC that it has 30,000 people around the world working on safety and security. It said that it removed 15.4m pieces of violent content between October and December, up from 7.9m in the previous three months.

Some content can be automatically detected and removed before it is seen by users. In the case of terrorist propaganda, Facebook says “detection technology” accounted for 99.5% of all the material taken down between July and September.

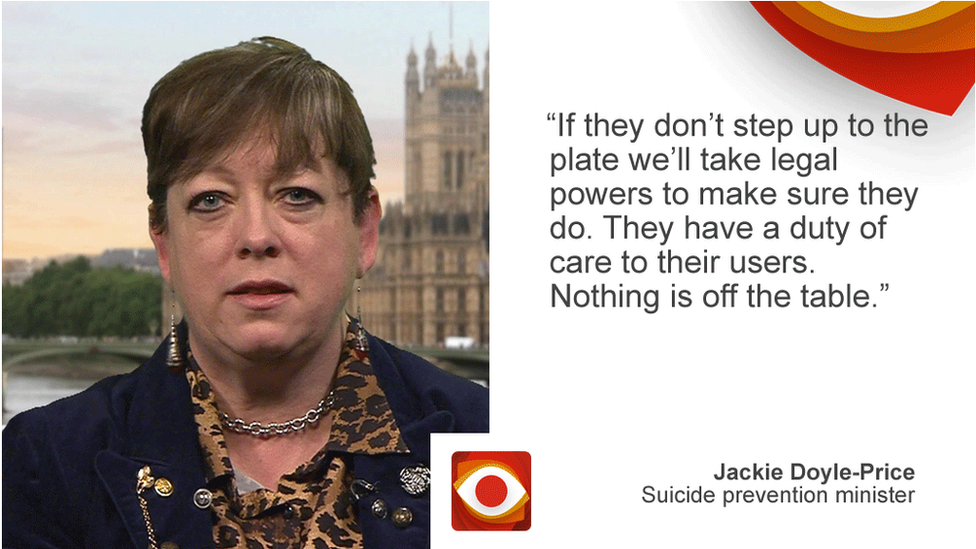

‘Duty of care’

If illegal content, such as “revenge pornography” or extremist material, is posted on a social media site, it will be the person who posted it, rather than the social media companies, who is most at risk of prosecution.

This is a situation that needs to change, according to Culture Minister Margot James. She says she wants the government to bring in legislation that will force social media platforms to remove illegal content and “prioritise the protection of users, especially children, young people and vulnerable adults”.

Image copyright

Getty Images

That view is echoed by the children’s commissioner for England, Anne Longfield. She has also called for the government to make it clear that social media companies have a duty of care for children using their sites.

“It would mean online providers like Facebook, Snapchat, Instagram and others would owe a duty to take all reasonable and proportionate care to protect children from any reasonably foreseeable harm,” she said.

“Harmful content would include images around bullying, harassment and abuse, discrimination or hate speech, violent or threatening behaviour, glorifying illegal or harmful activity, encouraging suicide or self-harm and addiction or unhealthy body images.”

Facebook has acknowledged there is more it could do and says it is “looking at ways to work with governments and regulation in areas where we don’t think it makes sense for a private company to set the rules on its own”.

So, what do other countries do? Some are taking action to protect users, while others have been criticised for going too far.

Germany

Germany’s NetzDG law came into effect at the beginning of 2018, applying to companies with more than two million registered users in the country.

They were forced to set up procedures to review complaints about content they are hosting and to remove, within 24 hours, anything that is clearly illegal.

Individuals may be fined up to €5m ($5.6m; £4.4m) and companies up to €50m for failing to comply with these requirements.

In the first year of the new law there were reportedly 714 complaints from users who said that online platforms had not deleted or blocked illegal content within the statutory period.

The Federal Ministry of Justice confirmed to the BBC that the figure had been considerably below the 25,000 complaints a year it had been expecting and that there have been no fines issued so far.

Russia

Under Russia’s data laws from 2015, social media companies are required to store any data about Russians on servers within the country.

Its communications watchdog is taking action against Facebook and Twitter for not being clear about how they plan to comply with this.

Russia is also considering two laws similar to Germany’s, requiring platforms to take down offensive material within 24 hours of being alerted to it and imposing fines on companies that fail to do so.

China

Sites such as Twitter, Google and WhatsApp are blocked in China. Their services are provided instead by Chinese applications such as Weibo, Baidu and WeChat.

Chinese authorities have also had some success in restricting access to the virtual private networks that some users have employed to bypass the blocks on sites.

The Cyberspace Administration of China announced at the end of January that in the previous six months it had closed 733 websites and “cleaned up” 9,382 mobile apps. Those are, however, reported to be more likely illegal gambling apps, or copies of existing apps being used for illegal purposes, than social media.

China has hundreds of thousands of cyber-police, who monitor social media platforms and screen messages that are deemed to be politically sensitive.

Some keywords are automatically censored outright, such as references to the 1989 Tiananmen Square incident.

New words that are seen as being sensitive are added to a long list of censored words and are either temporarily banned, or are filtered out from social platforms.

Cardboard cut-outs were used at demonstrations in Washington and Brussels last year

European Union

The EU is considering a clampdown, specifically on terror videos.

Social media platforms would face fines if they did not delete extremist content within an hour.

The EU has also introduced the General Data Protection Regulation (GDPR), which sets rules on how companies, including social media platforms, store and use people’s data.

But it is another proposed directive that has worried internet companies.

Article 13 of the copyright directive would put the responsibility on platforms to make sure that copyright infringing content is not hosted on their sites.

Previous legislation has only required the platforms to take down such content if it is pointed out to them. Shifting the responsibility would be a big deal for social media companies.
